Matches in Nanopublications for { ?s <http://schema.org/description> ?o ?g. }

- DestinE_ESA_GFTS description "These webpages are rendered from GitHub repository and contain all the information about the GFTS project. This includes internal description of the use case, technical documentation, progress, presentations, etc." assertion.

- 2edcfa66-0f59-42f4-aa29-1c5681466424 description "**Use Case topic**: The goal of this use case is the development and implementation of the Global Fish Tracking System (GFTS) to enhance understanding and management of wild fish stocks **Scale of the Use Case (Global/Regional/National)**: Local to Global (various locations worldwide) **Policy addressed**: Fisheries Management Policy **Data Sources used**: Climate Change Adaptation (Climate DT: Routine and On-Demand for some higher resolution tracking), Sea Temperature observation (Satelite, in-situ) Copernicus Marine services (Sea temperature and associated value), Bathymetry (Gebco), biologging fish data **Github Repository**: [https://github.com/destination-earth/DestinE_ESA_GFTS.git](https://github.com/destination-earth/DestinE_ESA_GFTS.git)" assertion.

- e0dc162e-b276-4ba6-ac7d-8122e3cf8daa description "This folder contains presentations or other kind of materials (such as training material) developed and presented during events." assertion.

- f4351d12-996f-42c0-a920-dbc4513691c5 description "This folder contains project documents such as DMP, link to website and github repository, etc." assertion.

- 9b1c9bc2-f28a-449d-be32-0b36fe29ab1c description "This pitcure shows Tina Odaka presenting the Global Fish Tracking System (GFTS) DestinE DESP Use Case at the 8th International Bio-logging Science Symposium, Tokyo, Japan (4-8 March 2024)." assertion.

- a09a17f7-75cb-4d19-8166-aea9308ce506 description "Slide extracted from the presentation to the 3rd Destination Earth User eXchange (2024)." assertion.

- egusphere-egu24-10741 description "Poster presentated at EGU 2024." assertion.

- egusphere-egu24-15500 description "Presentation given at EGU 2024." assertion.

- zenodo.10213946 description "Presentation given at the kick-off meting of the GFTS project." assertion.

- zenodo.10372387 description "Slides presented by Mathieu Woillez at the Roadshow Webinar: DestinE in action – meet the first DESP use cases (13 December 2023)" assertion.

- zenodo.10809819 description "Poster presented at the 8th InternationalBio-logging Science Symposium by Tina Odaka, March 2024." assertion.

- zenodo.11185948 description "Project Management Plan for the Global fish Tracking System Use Case on the DestinE Platform." assertion.

- zenodo.11186084 description "Deliverable 5.2 - Use Case Descriptor for the Global fish Tracking System Use Case on the DestinE Platform." assertion.

- zenodo.11186123 description "The Gobal Fish track system Use case Application on the DestinE Platform" assertion.

- zenodo.11186179 description "Deliverable 5.5 corresponding to the Global Fish tracking System Use Case Promotion Package" assertion.

- zenodo.11186191 description "This report corresponds to the Software Reuse File for the GFTS DestinE Platform Use Case. New version will be uploaded regularly." assertion.

- zenodo.11186227 description "The Software Release Plan for the Global Fish Tracking System DestinE Use Case." assertion.

- zenodo.11186257 description "The Software Requirement Specifications for the Global fish Tracking System DestinE Use Case." assertion.

- zenodo.11186288 description "The Software Verification and Validation Plan for the Global fish Tracking System DestinE Use Case." assertion.

- zenodo.11186318 description "The Software Verification and Validation Report from the Global Fish Tracking System DestinE Use Case." assertion.

- zenodo.13908850 description "Poster presented at the 2nd DestinE User eXchange Conference." assertion.

- pangeo_openeo.png description "Image used to illustrate the joint collaboration between Pangeo and OpenEO." assertion.

- pangeo-openeo-BiDS-2023 description "It points to the rendered version of the Pangeo & OpenEO training material that was generated with Jupyter book. Please bear in mind that it links to the latest version and not necessarily to the exact version used during the training. The bids2023 release is the source code (markdown & Jupyter book) used for the actual training." assertion.

- 80e2215b-49cb-456e-9ae9-803f3bcdbba3 description "BiDS - Big Data from Space Big Data from Space 2023 (BiDS) brings together key actors from industry, academia, EU entities and government to reveal user needs, exchange ideas and showcase latest technical solutions and applications touching all aspects of space and big data technologies. The 2023 edition of BiDS will focus not only on the technologies enabling insight and foresight inferable from big data, but will emphasize how these technologies impact society. More information can be found on the BiDS’23 website. ## Pangeo & OpenEO tutorial The tutorials are divided in 3 parts: - Introduction to Pangeo - Introduction to OpenEO - Unlocking the Power of Space Data with Pangeo & OpenEO The workshop timelines, setup and content are accessible online at [https://pangeo-data.github.io/pangeo-openeo-BiDS-2023](https://pangeo-data.github.io/pangeo-openeo-BiDS-2023)." assertion.

- 0da58fff-a17b-4ec7-97d1-3eb9cb89e1cf description "Jupyter Book source code that was collaboratively developed to deliver Pangeo & OpenEO training at BiDS 2023. All the Jupyter Notebooks are made available under MIT license.The tutorial is written in markdown and can be rendered in HTML using Jupyter book." assertion.

- dd5c3d62-b632-46a1-99e4-761f2e6cb60d description dd5c3d62-b632-46a1-99e4-761f2e6cb60d assertion.

- dd5c3d62-b632-46a1-99e4-761f2e6cb60d description "## Summary HPPIDiscovery is a scientific workflow to augment, predict and perform an insilico curation of host-pathogen Protein-Protein Interactions (PPIs) using graph theory to build new candidate ppis and machine learning to predict and evaluate them by combining multiple PPI detection methods of proteins according to three categories: structural, based on primary aminoacid sequence and functional annotations.<br> HPPIDiscovery contains three main steps: (i) acquirement of pathogen and host proteins information from seed ppis provided by HPIDB search methods, (ii) Model training and generation of new candidate ppis from HPIDB seed proteins' partners, and (iii) Evaluation of new candidate ppis and results exportation. (i) The first step acquires the identification of the taxonomy ids of the host and pathogen organisms in the result files. Then it proceeds parsing and cleaning the HPIDB results and downloading the protein interactions of the found organisms from the STRING database. The string protein identifiers are also mapped using the id mapping tool of uniprot API and we retrieve the uniprot entry ids along with the functional annotations, sequence, domain and kegg enzymes. (ii) The second step builds the training dataset using the non redundant hpidb validated interactions of each genome as positive set and random string low confidence ppis from each genome as negative set. Then, PredPrin tool is executed in the training mode to obtain the model that will evaluate the new candidate PPIs. The new ppis are then generated by performing a pairwise combination of string partners of host and pathogen hpidb proteins. Finally, (iii) in the third step, the predprin tool is used in the test mode to evaluate the new ppis and generate the reports and list of positively predicted ppis. The figure below illustrates the steps of this workflow. ## Requirements: * Edit the configuration file (config.yaml) according to your own data, filling out the following fields: - base_data: location of the organism folders directory, example: /home/user/data/genomes - parameters_file: Since this workflow may perform parallel processing of multiple organisms at the same time, you must prepate a tabulated file containng the genome folder names located in base data, where the hpidb files are located. Example: /home/user/data/params.tsv. It must have the following columns: genome (folder name), hpidb_seed_network (the result exported by one of the search methods available in hpidb database), hpidb_search_method (the type of search used to generate the results) and target_taxon (the target taxon id). The column hpidb_source may have two values: keyword or homology. In the keyword mode, you provide a taxonomy, protein name, publication id or detection method and you save all results (mitab.zip) in the genome folder. Finally, in the homology mode allows the user to search for host pathogen ppis giving as input fasta sequences of a set of proteins of the target pathgen for enrichment (so you have to select the search for a pathogen set) and you save the zip folder results (interaction data) in the genome folder. This option is extremely useful when you are not sure that your organism has validated protein interactions, then it finds validated interactions from the closest proteins in the database. In case of using the homology mode, the identifiers of the pathogens' query fasta sequences must be a Uniprot ID. All the query protein IDs must belong to the same target organism (taxon id). - model_file: path of a previously trained model in joblib format (if you want to train from the known validated PPIs given as seeds, just put a 'None' value) ## Usage Instructions The steps below consider the creation of a sqlite database file with all he tasks events which can be used after to retrieve the execution time taken by the tasks. It is possible run locally too (see luigi's documentation to change the running command). <br><br> * Preparation: 1. ````git clone https://github.com/YasCoMa/hppidiscovery.git```` 2. ````cd hppidiscovery```` 3. ````mkdir luigi_log```` 4. ````luigid --background --logdir luigi_log```` (start luigi server) 5. conda env create -f hp_ppi_augmentation.yml 6. conda activate hp_ppi_augmentation 6.1. (execute ````pip3 install wget```` (it is not installed in the environment)) 7. run ````pwd```` command and get the full path 8. Substitute in config_example.yaml with the full path obtained in the previous step 9. Download SPRINT pre-computed similarities in https://www.csd.uwo.ca/~ilie/SPRINT/precomputed_similarities.zip and unzip it inside workflow_hpAugmentation/predprin/core/sprint/HSP/ 10. ````cd workflow_hpAugmentation/predprin/```` 11. Uncompress annotation_data.zip 12. Uncompress sequence_data.zip 13. ````cd ../../```` 14. ````cd workflow_hpAugmentation```` 15. snake -n (check the plan of jobs, it should return no errors and exceptions) 16. snakemake -j 4 (change this number according the number of genomes to analyse and the amount of cores available in your machine)" assertion.

- 13c69a83-de3f-4379-b137-6a12d45bf6e7 description "## Summary HPPIDiscovery is a scientific workflow to augment, predict and perform an insilico curation of host-pathogen Protein-Protein Interactions (PPIs) using graph theory to build new candidate ppis and machine learning to predict and evaluate them by combining multiple PPI detection methods of proteins according to three categories: structural, based on primary aminoacid sequence and functional annotations.<br> HPPIDiscovery contains three main steps: (i) acquirement of pathogen and host proteins information from seed ppis provided by HPIDB search methods, (ii) Model training and generation of new candidate ppis from HPIDB seed proteins' partners, and (iii) Evaluation of new candidate ppis and results exportation. (i) The first step acquires the identification of the taxonomy ids of the host and pathogen organisms in the result files. Then it proceeds parsing and cleaning the HPIDB results and downloading the protein interactions of the found organisms from the STRING database. The string protein identifiers are also mapped using the id mapping tool of uniprot API and we retrieve the uniprot entry ids along with the functional annotations, sequence, domain and kegg enzymes. (ii) The second step builds the training dataset using the non redundant hpidb validated interactions of each genome as positive set and random string low confidence ppis from each genome as negative set. Then, PredPrin tool is executed in the training mode to obtain the model that will evaluate the new candidate PPIs. The new ppis are then generated by performing a pairwise combination of string partners of host and pathogen hpidb proteins. Finally, (iii) in the third step, the predprin tool is used in the test mode to evaluate the new ppis and generate the reports and list of positively predicted ppis. The figure below illustrates the steps of this workflow. ## Requirements: * Edit the configuration file (config.yaml) according to your own data, filling out the following fields: - base_data: location of the organism folders directory, example: /home/user/data/genomes - parameters_file: Since this workflow may perform parallel processing of multiple organisms at the same time, you must prepate a tabulated file containng the genome folder names located in base data, where the hpidb files are located. Example: /home/user/data/params.tsv. It must have the following columns: genome (folder name), hpidb_seed_network (the result exported by one of the search methods available in hpidb database), hpidb_search_method (the type of search used to generate the results) and target_taxon (the target taxon id). The column hpidb_source may have two values: keyword or homology. In the keyword mode, you provide a taxonomy, protein name, publication id or detection method and you save all results (mitab.zip) in the genome folder. Finally, in the homology mode allows the user to search for host pathogen ppis giving as input fasta sequences of a set of proteins of the target pathgen for enrichment (so you have to select the search for a pathogen set) and you save the zip folder results (interaction data) in the genome folder. This option is extremely useful when you are not sure that your organism has validated protein interactions, then it finds validated interactions from the closest proteins in the database. In case of using the homology mode, the identifiers of the pathogens' query fasta sequences must be a Uniprot ID. All the query protein IDs must belong to the same target organism (taxon id). - model_file: path of a previously trained model in joblib format (if you want to train from the known validated PPIs given as seeds, just put a 'None' value) ## Usage Instructions The steps below consider the creation of a sqlite database file with all he tasks events which can be used after to retrieve the execution time taken by the tasks. It is possible run locally too (see luigi's documentation to change the running command). <br><br> * Preparation: 1. ````git clone https://github.com/YasCoMa/hppidiscovery.git```` 2. ````cd hppidiscovery```` 3. ````mkdir luigi_log```` 4. ````luigid --background --logdir luigi_log```` (start luigi server) 5. conda env create -f hp_ppi_augmentation.yml 6. conda activate hp_ppi_augmentation 6.1. (execute ````pip3 install wget```` (it is not installed in the environment)) 7. run ````pwd```` command and get the full path 8. Substitute in config_example.yaml with the full path obtained in the previous step 9. Download SPRINT pre-computed similarities in https://www.csd.uwo.ca/~ilie/SPRINT/precomputed_similarities.zip and unzip it inside workflow_hpAugmentation/predprin/core/sprint/HSP/ 10. ````cd workflow_hpAugmentation/predprin/```` 11. Uncompress annotation_data.zip 12. Uncompress sequence_data.zip 13. ````cd ../../```` 14. ````cd workflow_hpAugmentation```` 15. snake -n (check the plan of jobs, it should return no errors and exceptions) 16. snakemake -j 4 (change this number according the number of genomes to analyse and the amount of cores available in your machine)" assertion.

- edit?usp=sharing description "This file uses new_atrix.csv as a starting point and filtered by dates e.g. from 1st January 2022 to 31 December 2022. Then a pivot table is created where sort, q and reslabel were selected for column and c for row." assertion.

- edit?usp=sharing description "This google sheet contains the FIP convergence matrix for year 2022. The tab "matrix" is the main tab while FERs are unique list of FERs and Communities tab the list of unique names for communities." assertion.

- 073ab8fc-67b3-4ec7-915e-17ffb47f09c5 description "This Research Object contains all the data used for producing a FIP convergence matrix for the year 2022. The raw data has been fetch from [https://github.com/peta-pico/dsw-nanopub-api](https://github.com/peta-pico/dsw-nanopub-api) on Thursday 5 October 2023. The original matrix called new_matrix.csv ([https://github.com/peta-pico/dsw-nanopub-api/blob/main/tables/new_matrix.csv](https://github.com/peta-pico/dsw-nanopub-api/blob/main/tables/new_matrix.csv)) is stored in the raw data folder for reference. The methodology used to create the FIP convergence Matrix is detailed in the presentation from Barbara Magagna [https://osf.io/de6su/](https://osf.io/de6su/)." assertion.

- 7683f508-3363-4c9a-8eb2-12d31d7e5a4a description "Partial view of the FIP convergence matrix for illustration purposes only." assertion.

- a55b4924-4d0b-4a17-8a5c-22dce26fcf6c description "This CSV file is the result of a SPARQL query executed by a GitHub action." assertion.

- bc3f8893-19ec-4d72-a1fb-20fc92244634 description "PDF file generated from the FIP convergence Matrix google sheet." assertion.

- 0a79368c-5f22-42b6-b315-3e76354918f9 description "This repository contains the python code to reproduce the experiments in Dłotko, Gurnari "Euler Characteristic Curves and Profiles: a stable shape invariant for big data problems"" assertion.

- ed0379fa-4990-4eb0-8375-3c3572847495 description "This repository contains the python code to reproduce the experiments in Dłotko, Gurnari "Euler Characteristic Curves and Profiles: a stable shape invariant for big data problems"" assertion.

- content description "This Notebook provides a workflow of ArcGis toolboxes to identify ML targets from bathynetry." assertion.

- content description "This Notebook provides a workflow of ArcGis toolboxes to identify ML targets from bathynetry." assertion.

- ro-id.EHMJMDN68Q description "It reads and elaborates ASCII file produced with FM Midwater in order to calculate some statistics, mean, standard deviation of backscatter, depth, angle of net for each ping, and to produce a graph with the track of the net" assertion.

- ro-id.IKMY8URJ9Q description "It calculates the sink velocity of a net floating in water starting from water column data." assertion.

- 058215c9-d7d3-4e78-8f49-5655f899d5e3 description "A dedicated workflow in ArcGIS (shared as Jupyter notebook) was developed to identify targets from the bathymetry within the MAELSTROM Project - Smart technology for MArinE Litter SusTainable RemOval and Management. In that framework, the workflow identified marine litter on the seafloor starting from a bathymetric surface collected in Sacca Fisola (Venice Lagoon) on 2021." assertion.

- 2ca9dde1-bb6c-4a17-8a9c-121bbf83ac7b description "Marine Litter Identification from Bathymetry" assertion.

- 515fd558-2e2e-41e6-a613-71146ba0866c description "EASME/EMFF/2017/1.2.1.12/S2/05/SI2.789314 MarGnet official document with description of ROs developed within MarGnet Project" assertion.

- 51d98bb1-2fc4-4468-8f17-c9c05edb7b57 description "Schema of the ArcGIS toolnested in the Jupyter Notebook" assertion.

- ce7f4ce9-0a6e-41a1-b1ec-f95d1f883c5a description "EASME/EMFF/2017/1.2.1.12/S2/05/SI2.789314 MarGnet official document with description of the tool" assertion.

- df584059-663d-4da3-8eec-d01467544383 description "Workflow requirements" assertion.

- fc64e1c2-a7de-41db-a2b2-372b81cda150 description "EASME/EMFF/2017/1.2.1.12/S2/05/SI2.789314 MarGnet official document with description of the application of the tool" assertion.

- 1873-0604.2012018 description "This paper presents a semi-automated method to recognize, spatially delineate and characterise morphometrically pockmarks at the seabed" assertion.

- 1873-0604.2012018 description "This paper presents a semi-automated method to recognize, spatially delineate and characterise morphometrically pockmarks at the seabed" assertion.

- b9f63328-264e-4d28-94b7-397e50cf2dad description "The shape files contain the marine litter targets both manually identified and obtained as output of the ArcGis workflow applied to the input bathymetry" assertion.

- b9f63328-264e-4d28-94b7-397e50cf2dad description "ArcGis workflow output metadata" assertion.

- 7019724e-b5a0-4f7e-a7d6-a1baacac85df description "# ERGA Protein-coding gene annotation workflow. Adapted from the work of Sagane Joye: https://github.com/sdind/genome_annotation_workflow ## Prerequisites The following programs are required to run the workflow and the listed version were tested. It should be noted that older versions of snakemake are not compatible with newer versions of singularity as is noted here: [https://github.com/nextflow-io/nextflow/issues/1659](https://github.com/nextflow-io/nextflow/issues/1659). `conda v 23.7.3` `singularity v 3.7.3` `snakemake v 7.32.3` You will also need to acquire a licence key for Genemark and place this in your home directory with name `~/.gm_key` The key file can be obtained from the following location, where the licence should be read and agreed to: http://topaz.gatech.edu/GeneMark/license_download.cgi ## Workflow The pipeline is based on braker3 and was tested on the following dataset from Drosophila melanogaster: [https://doi.org/10.5281/zenodo.8013373](https://doi.org/10.5281/zenodo.8013373) ### Input data - Reference genome in fasta format - RNAseq data in paired-end zipped fastq format - uniprot fasta sequences in zipped fasta format ### Pipeline steps - **Repeat Model and Mask** Run RepeatModeler using the genome as input, filter any repeats also annotated as protein sequences in the uniprot database and use this filtered libray to mask the genome with RepeatMasker - **Map RNAseq data** Trim any remaining adapter sequences and map the trimmed reads to the input genome - **Run gene prediction software** Use the mapped RNAseq reads and the uniprot sequences to create hints for gene prediction using Braker3 on the masked genome - **Evaluate annotation** Run BUSCO to evaluate the completeness of the annotation produced ### Output data - FastQC reports for input RNAseq data before and after adapter trimming - RepeatMasker report containing quantity of masked sequence and distribution among TE families - Protein-coding gene annotation file in gff3 format - BUSCO summary of annotated sequences ## Setup Your data should be placed in the `data` folder, with the reference genome in the folder `data/ref` and the transcript data in the foler `data/rnaseq`. The config file requires the following to be given: ``` asm: 'absolute path to reference fasta' snakemake_dir_path: 'path to snakemake working directory' name: 'name for project, e.g. mHomSap1' RNA_dir: 'absolute path to rnaseq directory' busco_phylum: 'busco database to use for evaluation e.g. mammalia_odb10' ```" assertion.

- 7019724e-b5a0-4f7e-a7d6-a1baacac85df description "# ERGA Protein-coding gene annotation workflow. Adapted from the work of Sagane Joye: https://github.com/sdind/genome_annotation_workflow ## Prerequisites The following programs are required to run the workflow and the listed version were tested. It should be noted that older versions of snakemake are not compatible with newer versions of singularity as is noted here: [https://github.com/nextflow-io/nextflow/issues/1659](https://github.com/nextflow-io/nextflow/issues/1659). `conda v 23.7.3` `singularity v 3.7.3` `snakemake v 7.32.3` You will also need to acquire a licence key for Genemark and place this in your home directory with name `~/.gm_key` The key file can be obtained from the following location, where the licence should be read and agreed to: http://topaz.gatech.edu/GeneMark/license_download.cgi ## Workflow The pipeline is based on braker3 and was tested on the following dataset from Drosophila melanogaster: [https://doi.org/10.5281/zenodo.8013373](https://doi.org/10.5281/zenodo.8013373) ### Input data - Reference genome in fasta format - RNAseq data in paired-end zipped fastq format - uniprot fasta sequences in zipped fasta format ### Pipeline steps - **Repeat Model and Mask** Run RepeatModeler using the genome as input, filter any repeats also annotated as protein sequences in the uniprot database and use this filtered libray to mask the genome with RepeatMasker - **Map RNAseq data** Trim any remaining adapter sequences and map the trimmed reads to the input genome - **Run gene prediction software** Use the mapped RNAseq reads and the uniprot sequences to create hints for gene prediction using Braker3 on the masked genome - **Evaluate annotation** Run BUSCO to evaluate the completeness of the annotation produced ### Output data - FastQC reports for input RNAseq data before and after adapter trimming - RepeatMasker report containing quantity of masked sequence and distribution among TE families - Protein-coding gene annotation file in gff3 format - BUSCO summary of annotated sequences ## Setup Your data should be placed in the `data` folder, with the reference genome in the folder `data/ref` and the transcript data in the foler `data/rnaseq`. The config file requires the following to be given: ``` asm: 'absolute path to reference fasta' snakemake_dir_path: 'path to snakemake working directory' name: 'name for project, e.g. mHomSap1' RNA_dir: 'absolute path to rnaseq directory' busco_phylum: 'busco database to use for evaluation e.g. mammalia_odb10' ``` " assertion.

- 11f3e069-8d1d-48da-876f-52fd6d255223 description "# ERGA Protein-coding gene annotation workflow. Adapted from the work of Sagane Joye: https://github.com/sdind/genome_annotation_workflow ## Prerequisites The following programs are required to run the workflow and the listed version were tested. It should be noted that older versions of snakemake are not compatible with newer versions of singularity as is noted here: [https://github.com/nextflow-io/nextflow/issues/1659](https://github.com/nextflow-io/nextflow/issues/1659). `conda v 23.7.3` `singularity v 3.7.3` `snakemake v 7.32.3` You will also need to acquire a licence key for Genemark and place this in your home directory with name `~/.gm_key` The key file can be obtained from the following location, where the licence should be read and agreed to: http://topaz.gatech.edu/GeneMark/license_download.cgi ## Workflow The pipeline is based on braker3 and was tested on the following dataset from Drosophila melanogaster: [https://doi.org/10.5281/zenodo.8013373](https://doi.org/10.5281/zenodo.8013373) ### Input data - Reference genome in fasta format - RNAseq data in paired-end zipped fastq format - uniprot fasta sequences in zipped fasta format ### Pipeline steps - **Repeat Model and Mask** Run RepeatModeler using the genome as input, filter any repeats also annotated as protein sequences in the uniprot database and use this filtered libray to mask the genome with RepeatMasker - **Map RNAseq data** Trim any remaining adapter sequences and map the trimmed reads to the input genome - **Run gene prediction software** Use the mapped RNAseq reads and the uniprot sequences to create hints for gene prediction using Braker3 on the masked genome - **Evaluate annotation** Run BUSCO to evaluate the completeness of the annotation produced ### Output data - FastQC reports for input RNAseq data before and after adapter trimming - RepeatMasker report containing quantity of masked sequence and distribution among TE families - Protein-coding gene annotation file in gff3 format - BUSCO summary of annotated sequences ## Setup Your data should be placed in the `data` folder, with the reference genome in the folder `data/ref` and the transcript data in the foler `data/rnaseq`. The config file requires the following to be given: ``` asm: 'absolute path to reference fasta' snakemake_dir_path: 'path to snakemake working directory' name: 'name for project, e.g. mHomSap1' RNA_dir: 'absolute path to rnaseq directory' busco_phylum: 'busco database to use for evaluation e.g. mammalia_odb10' ```" assertion.

- a6376631-ef64-4c7a-b242-893d096fd33b description "The research object refers to the Variational data assimilation with deep prior (CIRC23) notebook published in the Environmental Data Science book." assertion.

- 007d800b-a7b7-4fc5-b18b-549648b77006 description "Contains outputs, (figures, models and results), generated in the Jupyter notebook of Variational data assimilation with deep prior (CIRC23)" assertion.

- 176438b9-54bb-4878-9a80-4a40b2a98877 description "Related publication of the modelling presented in the Jupyter notebook" assertion.

- 28318869-f7cb-43fa-bbf4-44ad75a37cdf description "Contains input Codebase of the reproduced paper used in the Jupyter notebook of Variational data assimilation with deep prior (CIRC23)" assertion.

- 5864b695-06f0-4cbb-8249-dfd58c6840e0 description "Lock conda file of the Jupyter notebook hosted by the Environmental Data Science Book" assertion.

- 96bda249-e769-4853-acf8-9989326765f5 description "Jupyter Notebook hosted by the Environmental Data Science Book" assertion.

- a8665cdc-b1de-4c19-b18b-2793a0fe77e2 description "Rendered version of the Jupyter Notebook hosted by the Environmental Data Science Book" assertion.

- b76ff147-ca03-4d55-aeaf-3533398b4547 description "Conda environment when user want to have the same libraries installed without concerns of package versions" assertion.

- f58b5532-99d4-44fd-aa97-188e9885e0e7 description "Analise for usage of Software for SME Business in Ferizaj." assertion.

- da3e1263-e472-48be-9f0e-a287ad4ca28b description "Creating and streamlining virtual environments with **OpenMP** to use parallelization with **Fortran** code on the field of theoretical solid state physics." assertion.

- 5298 description "" assertion.

- 709 description "" assertion.

- 58507a37-ee00-4173-9c7d-f0bd8effa41d description "The aim of this project is to evaluate the perceptions and approaches of parents toward childhood vaccines and especially children's vaccination with covid-19 vaccines." assertion.

- 5881 description "" assertion.

- c53c0d20-4754-45a3-b863-93d11e653520 description "> Research"  of digitalisation and AI integration in employee engagement in SMEs," assertion.

- 38051187-5ecf-4bcd-86a2-2110bda04a83 description "Description for this test project" assertion.

- 81930c86-66d9-40aa-922e-3eb71a7520ef description "The research object refers to the Concatenating a gridded rainfall reanalysis dataset into a time series notebook published in the Environmental Data Science book." assertion.

- 0fa56f9b-073f-4cfa-9af7-c4fc97561bef description "Contains outputs, (figures), generated in the Jupyter notebook of Concatenating a gridded rainfall reanalysis dataset into a time series" assertion.

- 26a79ea5-8a6d-49ab-a586-0667f0e61bab description "Related publication of the exploration presented in the Jupyter notebook" assertion.

- 55beb690-5dc3-4b73-8d48-282d2cd80e5d description "Conda environment when user want to have the same libraries installed without concerns of package versions" assertion.

- 6810340b-a8e6-455f-8852-87027145f60d description "Rendered version of the Jupyter Notebook hosted by the Environmental Data Science Book" assertion.

- 72464c70-1c24-41c7-8cbb-f1ce006286e6 description "Jupyter Notebook hosted by the Environmental Data Science Book" assertion.

- 75a693fc-75cb-42cf-960a-0de69034c9ae description "Pip requirements file containing libraries to install after conda lock" assertion.

- ac40c997-ba8e-42a7-a8b0-1a56211dd2df description "Related publication of the exploration presented in the Jupyter notebook" assertion.

- b58c7815-b88e-4098-ab5b-56333cd46028 description "Lock conda file for osx-64 OS of the Jupyter notebook hosted by the Environmental Data Science Book" assertion.

- c1f6b632-95e1-4872-a619-11a4e042e530 description "Contains input of the Jupyter Notebook - Concatenating a gridded rainfall reanalysis dataset into a time series used in the Jupyter notebook of Concatenating a gridded rainfall reanalysis dataset into a time series" assertion.

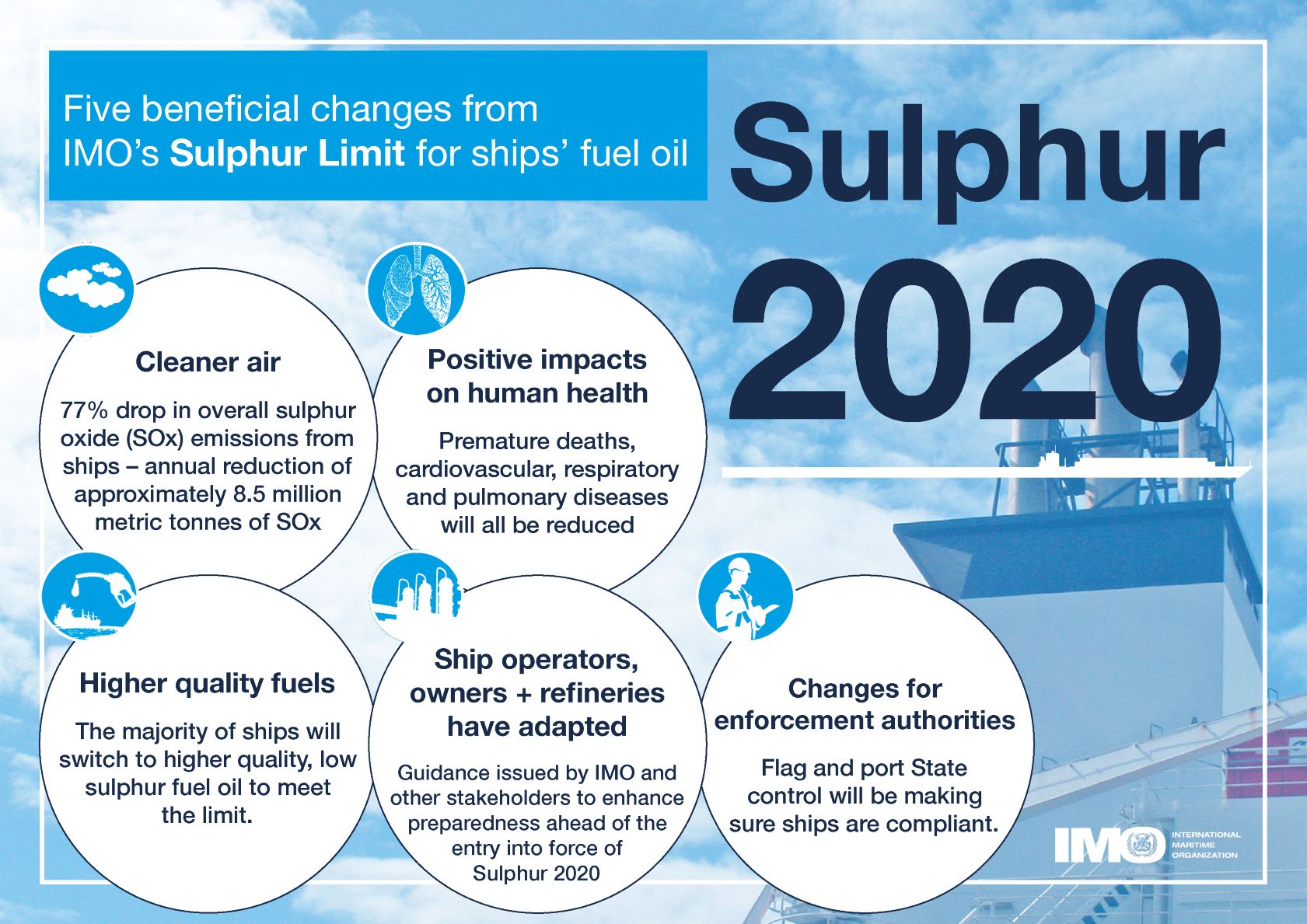

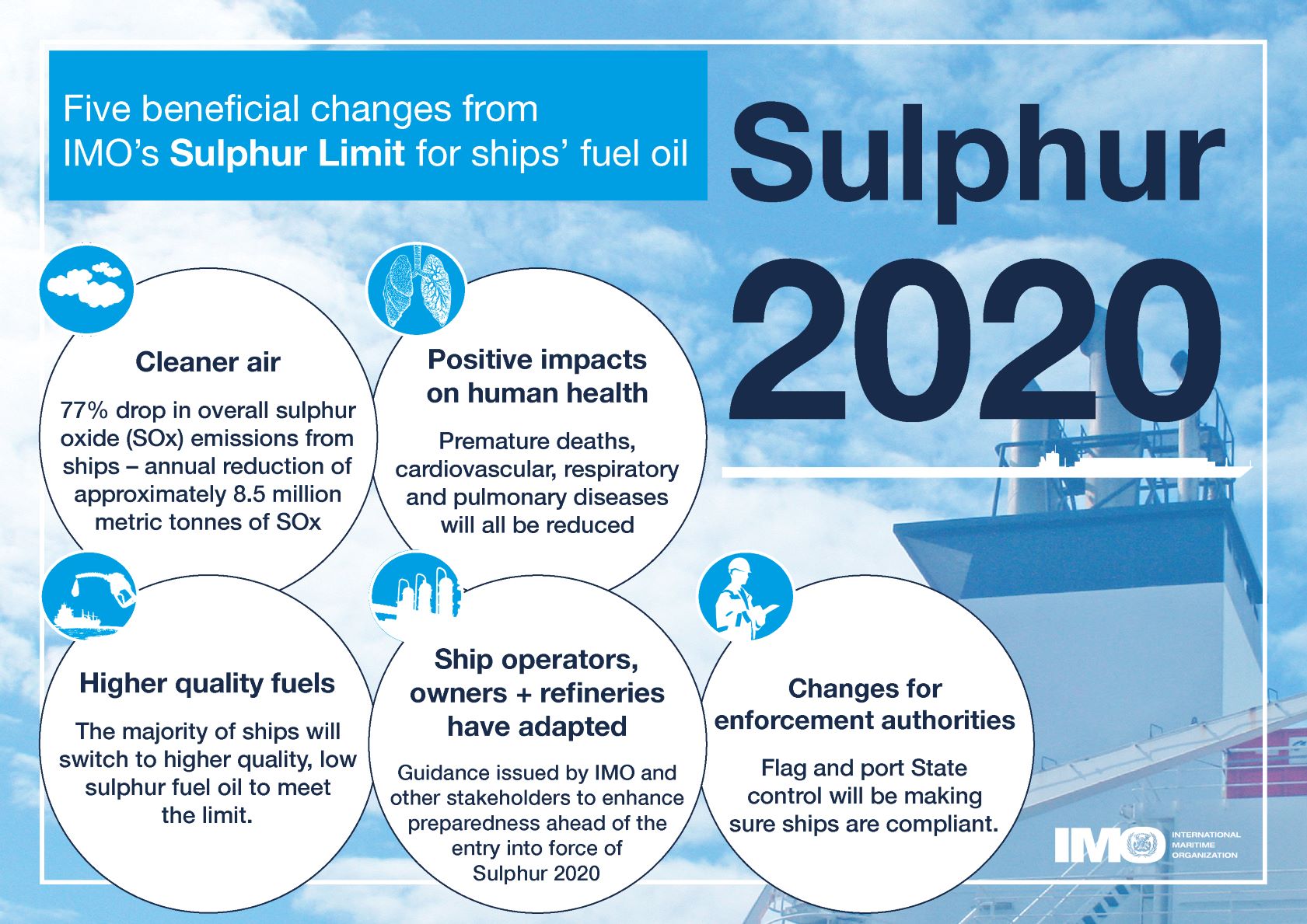

- 075e9430-5949-4a09-8622-d20916994eaa description "## Rationale From 1st January 2020 the global upper limit on the sulphur content of ships' fuel oil was reduced from 3.50% to 0.50%, which represents an ~86% cut (from [https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx](https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx)).  *Image from [IMO 2020 - cleaner shipping for cleaner air, 20 December 2019](https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx)* According to the [International Maritime Organization (IMO)](https://www.imo.org/) the new limit should lead to a 77% drop in overall SOx emissions from ships. The Figure below shows the 5 key beneficial changes from IMO's **Sulphur Limit** for Ships' fuel oil:  *Five beneficial changes from IMO’s Sulphur Limit for ships’ fuel oil* ## This Research Object's purpose In this work we look as the **actual impact of these measures on air pollution along shipping routes in Europe** based on Copernicus air quality data. [Copernicus Atmosphere Monitoring Service (CAMS)](https://ads.atmosphere.copernicus.eu/cdsapp#!/home) uses satellite data and other observations, together with computer models, to track the accumulation and movement of air pollutants around the planet (see [https://atmosphere.copernicus.eu/air-quality](https://atmosphere.copernicus.eu/air-quality)). ### Rohub - Adam plateform integration Some of this CAMS data is available from the [Adam platform](https://adamplatform.eu) and can be imported into a [Research Object](https://www.researchobject.org).  *Example of data (here daily temperatures) displayed on the Adam plateform* It is also possible, from the Research Object, to open the resource in the Adam platform, then interactively zoom into a particular geographical area (say to the right of the Strait of Gibraltar, along the track presumably followed by cargo ships to/from the Suez Canal) and change the date (for example between 2018-07-19 and 2023-07-19) to appreciate the change. #### To go further Obviously **a more detailed statistical analysis** would be required to minimize the effect of external factors (meteorological condition, level of cargo traffic, etc.) over a longer period of time to derive meaningful conclusions, however it does not seem that the high level of sulfur dioxide concentration from the pre-IMO regulation was reached after. ##### Looking at other pollutants Besides SOx the new regulation also contributed to decrease atmospheric concentrations in nitric oxides (NOx) as well as particulate matter (PM). ### References - [IMO 2020 - cleaner shipping for cleaner air, 20 December 2019](https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx)" assertion.

- 075e9430-5949-4a09-8622-d20916994eaa description "## Rationale From 1st January 2020 the global upper limit on the sulphur content of ships' fuel oil was reduced from 3.50% to 0.50%, which represents an ~86% cut (from [https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx](https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx)).  *Image from [IMO 2020 - cleaner shipping for cleaner air, 20 December 2019](https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx)* According to the [International Maritime Organization (IMO)](https://www.imo.org/) the new limit should lead to a 77% drop in overall SOx emissions from ships. The Figure below shows the 5 key beneficial changes from IMO's **Sulphur Limit** for Ships' fuel oil:  *Five beneficial changes from IMO’s Sulphur Limit for ships’ fuel oil* ## This Research Object's purpose In this work we look as the **actual impact of these measures on air pollution along shipping routes in Europe** based on Copernicus air quality data. [Copernicus Atmosphere Monitoring Service (CAMS)](https://ads.atmosphere.copernicus.eu/cdsapp#!/home) uses satellite data and other observations, together with computer models, to track the accumulation and movement of air pollutants around the planet (see [https://atmosphere.copernicus.eu/air-quality](https://atmosphere.copernicus.eu/air-quality)). ### Rohub - Adam plateform integration Some of this CAMS data is available from the [Adam platform](https://adamplatform.eu) and can be imported into a [Research Object](https://www.researchobject.org).  *Example of data (here daily temperatures) displayed on the Adam plateform* It is also possible, from the Research Object, to open the resource in the Adam platform, then interactively zoom into a particular geographical area (say to the right of the Strait of Gibraltar, along the track presumably followed by cargo ships to/from the Suez Canal) and change the date (for example between 2018-07-19 and 2023-07-19) to appreciate the change. #### To go further Obviously **a more detailed statistical analysis** would be required to minimize the effect of external factors (meteorological condition, level of cargo traffic, etc.) over a longer period of time to derive meaningful conclusions, however it does not seem that the high level of sulfur dioxide concentration from the pre-IMO regulation was reached after. ##### Looking at other pollutants Besides SOx the new regulation also contributed to decrease atmospheric concentrations in nitric oxides (NOx) as well as particulate matter (PM). ### References - [IMO 2020 - cleaner shipping for cleaner air, 20 December 2019](https://www.imo.org/en/MediaCentre/PressBriefings/pages/34-IMO-2020-sulphur-limit-.aspx)" assertion.

- 373f5793-4997-4854-a0c3-4ef89e0d554d description "The quality of the air we breathe can significantly impact our health and the environment. CAMS monitors and forecasts European air quality and worldwide long-range transport of pollutants." assertion.

- 3a4680dd-67ef-438f-bd0c-3ef031bbec0a description "Five beneficial changes from IMO's Sulphur Limit for ships' fuel oil" assertion.

- 5d873985-fdd4-4ac0-9249-53290fa3c8f5 description "This report examines the potential of electrofuels (e-fuels) to decarbonise long-haul aviation and maritime shipping. E-fuels like hydrogen, ammonia, e-methanol or e-kerosene can be produced from renewable energy and feedstocks and are more economical to deploy in these two modes than direct electrification. The analysis evaluates the challenges and opportunities related to e-fuel production technologies and feedstock options to identify priorities for making e-fuels cheaper and maximising emissions cuts. The research also explores operational requirements for the two sectors to deploy e-fuels and how governments can assist in adopting low-carbon fuels" assertion.

- a219dc91-cd87-455d-a2d6-3f5b83f0892c description "Nitrogen Oxide" assertion.

- ca3936e2-7b25-4776-ae9b-d1f9f5225bb1 description "Sulphur dioxide (SO2) from Copernicus Atmosphere Monitoring Service" assertion.

- e8752e1f-7c59-4b99-b1db-9e3e31e2a45c description "IMI news: Global limit on sulphur in ships' fuel oil reduced from 01 January 2020." assertion.

- ea4e5a1d-3ce7-4438-af08-15fdd453600a description "[](https://snakemake.readthedocs.io) # About SnakeMAGs SnakeMAGs is a workflow to reconstruct prokaryotic genomes from metagenomes. The main purpose of SnakeMAGs is to process Illumina data from raw reads to metagenome-assembled genomes (MAGs). SnakeMAGs is efficient, easy to handle and flexible to different projects. The workflow is CeCILL licensed, implemented in Snakemake (run on multiple cores) and available for Linux. SnakeMAGs performed eight main steps: - Quality filtering of the reads - Adapter trimming - Filtering of the host sequences (optional) - Assembly - Binning - Evaluation of the quality of the bins - Classification of the MAGs - Estimation of the relative abundance of the MAGs  # How to use SnakeMAGs ## Install conda The easiest way to install and run SnakeMAGs is to use [conda](https://www.anaconda.com/products/distribution). These package managers will help you to easily install [Snakemake](https://snakemake.readthedocs.io/en/stable/getting_started/installation.html). ## Install and activate Snakemake environment Note: The workflow was developed with Snakemake 7.0.0 ``` conda activate # First, set up your channel priorities conda config --add channels defaults conda config --add channels bioconda conda config --add channels conda-forge # Then, create a new environment for the Snakemake version you require conda create -n snakemake_7.0.0 snakemake=7.0.0 # And activate it conda activate snakemake_7.0.0 ``` Alternatively, you can also install Snakemake via mamba: ``` # If you do not have mamba yet on your machine, you can install it with: conda install -n base -c conda-forge mamba # Then you can install Snakemake conda activate base mamba create -c conda-forge -c bioconda -n snakemake snakemake # And activate it conda activate snakemake ``` ## SnakeMAGs executable The easiest way to procure SnakeMAGs and its related files is to clone the repository using git: ``` git clone https://github.com/Nachida08/SnakeMAGs.git ``` Alternatively, you can download the relevant files: ``` wget https://github.com/Nachida08/SnakeMAGs/blob/main/SnakeMAGs.smk https://github.com/Nachida08/SnakeMAGs/blob/main/config.yaml ``` ## SnakeMAGs input files - Illumina paired-end reads in FASTQ. - Adapter sequence file ([adapter.fa](https://github.com/Nachida08/SnakeMAGs/blob/main/adapters.fa)). - Host genome sequences in FASTA (if host_genome: "yes"), in case you work with host-associated metagenomes (e.g. human gut metagenome). ## Download Genome Taxonomy Database (GTDB) GTDB-Tk requires ~66G+ of external data (GTDB) that need to be downloaded and unarchived. Because this database is voluminous, we let you decide where you want to store it. SnakeMAGs do not download automatically GTDB, you have to do it: ``` #Download the latest release (tested with release207) #Note: SnakeMAGs uses GTDBtk v2.1.0 and therefore require release 207 as minimum version. See https://ecogenomics.github.io/GTDBTk/installing/index.html#installing for details. wget https://data.gtdb.ecogenomic.org/releases/latest/auxillary_files/gtdbtk_v2_data.tar.gz #Decompress tar -xzvf *tar.gz #This will create a folder called release207_v2 ``` All you have to do now is to indicate the path to the database folder (in our example, the folder is called release207_v2) in the config file, Classification section. ## Download the GUNC database (required if gunc: "yes") GUNC accepts either a progenomes or GTDB based reference database. Both can be downloaded using the ```gunc download_db``` command. For our study we used the default proGenome-derived GUNC database. It requires less resources with similar performance. ``` conda activate # Install and activate GUNC environment conda create --prefix /path/to/gunc_env conda install -c bioconda metabat2 --prefix /path/to/gunc_env source activate /path/to/gunc_env #Download the proGenome-derived GUNC database (tested with gunc_db_progenomes2.1) #Note: SnakeMAGs uses GUNC v1.0.5 gunc download_db -db progenomes /path/to/GUNC_DB ``` All you have to do now is to indicate the path to the GUNC database file in the config file, Bins quality section. ## Edit config file You need to edit the config.yaml file. In particular, you need to set the correct paths: for the working directory, to specify where are your fastq files, where you want to place the conda environments (that will be created using the provided .yaml files available in [SnakeMAGs_conda_env directory](https://github.com/Nachida08/SnakeMAGs/tree/main/SnakeMAGs_conda_env)), where are the adapters, where is GTDB and optionally where is the GUNC database and where is your host genome reference. Lastly, you need to allocate the proper computational resources (threads, memory) for each of the main steps. These can be optimized according to your hardware. Here is an example of a config file: ``` ##################################################################################################### ##### _____ ___ _ _ _ ______ __ __ _______ _____ ##### ##### / ___| | \ | | /\ | | / / | ____| | \ / | /\ / _____| / ___| ##### ##### | (___ | |\ \ | | / \ | |/ / | |____ | \/ | / \ | | __ | (___ ##### ##### \___ \ | | \ \| | / /\ \ | |\ \ | ____| | |\ /| | / /\ \ | | |_ | \___ \ ##### ##### ____) | | | \ | / /__\ \ | | \ \ | |____ | | \/ | | / /__\ \ | |____|| ____) | ##### ##### |_____/ |_| \__| /_/ \_\ |_| \_\ |______| |_| |_| /_/ \_\ \______/ |_____/ ##### ##### ##### ##################################################################################################### ############################ ### Execution parameters ### ############################ working_dir: /path/to/working/directory/ #The main directory for the project raw_fastq: /path/to/raw_fastq/ #The directory that contains all the fastq files of all the samples (eg. sample1_R1.fastq & sample1_R2.fastq, sample2_R1.fastq & sample2_R2.fastq...) suffix_1: "_R1.fastq" #Main type of suffix for forward reads file (eg. _1.fastq or _R1.fastq or _r1.fastq or _1.fq or _R1.fq or _r1.fq ) suffix_2: "_R2.fastq" #Main type of suffix for reverse reads file (eg. _2.fastq or _R2.fastq or _r2.fastq or _2.fq or _R2.fq or _r2.fq ) ########################### ### Conda environnemnts ### ########################### conda_env: "/path/to/SnakeMAGs_conda_env/" #Path to the provided SnakeMAGs_conda_env directory which contains the yaml file for each conda environment ######################### ### Quality filtering ### ######################### email: name.surname@your-univ.com #Your e-mail address threads_filter: 10 #The number of threads to run this process. To be adjusted according to your hardware resources_filter: 150 #Memory according to tools need (in GB) ######################## ### Adapter trimming ### ######################## adapters: /path/to/working/directory/adapters.fa #A fasta file contanning a set of various Illumina adaptors (this file is provided and is also available on github) trim_params: "2:40:15" #For further details, see the Trimmomatic documentation threads_trim: 10 #The number of threads to run this process. To be adjusted according to your hardware resources_trim: 150 #Memory according to tools need (in GB) ###################### ### Host filtering ### ###################### host_genome: "yes" #yes or no. An optional step for host-associated samples (eg. termite, human, plant...) threads_bowtie2: 50 #The number of threads to run this process. To be adjusted according to your hardware host_genomes_directory: /path/to/working/host_genomes/ #the directory where the host genome is stored host_genomes: /path/to/working/host_genomes/host_genomes.fa #A fasta file containing the DNA sequences of the host genome(s) threads_samtools: 50 #The number of threads to run this process. To be adjusted according to your hardware resources_host_filtering: 150 #Memory according to tools need (in GB) ################ ### Assembly ### ################ threads_megahit: 50 #The number of threads to run this process. To be adjusted according to your hardware min_contig_len: 1000 #Minimum length (in bp) of the assembled contigs k_list: "21,31,41,51,61,71,81,91,99,109,119" #Kmer size (for further details, see the megahit documentation) resources_megahit: 250 #Memory according to tools need (in GB) ############### ### Binning ### ############### threads_bwa: 50 #The number of threads to run this process. To be adjusted according to your hardware resources_bwa: 150 #Memory according to tools need (in GB) threads_samtools: 50 #The number of threads to run this process. To be adjusted according to your hardware resources_samtools: 150 #Memory according to tools need (in GB) seed: 19860615 #Seed number for reproducible results threads_metabat: 50 #The number of threads to run this process. To be adjusted according to your hardware minContig: 2500 #Minimum length (in bp) of the contigs resources_binning: 250 #Memory according to tools need (in GB) #################### ### Bins quality ### #################### #checkM threads_checkm: 50 #The number of threads to run this process. To be adjusted according to your hardware resources_checkm: 250 #Memory according to tools need (in GB) #bins_quality_filtering completion: 50 #The minimum completion rate of bins contamination: 10 #The maximum contamination rate of bins parks_quality_score: "yes" #yes or no. If yes bins are filtered according to the Parks quality score (completion-5*contamination >= 50) #GUNC gunc: "yes" #yes or no. An optional step to detect and discard chimeric and contaminated genomes using the GUNC tool threads_gunc: 50 #The number of threads to run this process. To be adjusted according to your hardware resources_gunc: 250 #Memory according to tools need (in GB) GUNC_db: /path/to/GUNC_DB/gunc_db_progenomes2.1.dmnd #Path to the downloaded GUNC database (see the readme file) ###################### ### Classification ### ###################### GTDB_data_ref: /path/to/downloaded/GTDB #Path to uncompressed GTDB-Tk reference data (GTDB) threads_gtdb: 10 #The number of threads to run this process. To be adjusted according to your hardware resources_gtdb: 250 #Memory according to tools need (in GB) ################## ### Abundances ### ################## threads_coverM: 10 #The number of threads to run this process. To be adjusted according to your hardware resources_coverM: 150 #Memory according to tools need (in GB) ``` # Run SnakeMAGs If you are using a workstation with Ubuntu (tested on Ubuntu 22.04): ```{bash} snakemake --cores 30 --snakefile SnakeMAGs.smk --use-conda --conda-prefix /path/to/SnakeMAGs_conda_env/ --configfile /path/to/config.yaml --keep-going --latency-wait 180 ``` If you are working on a cluster with Slurm (tested with version 18.08.7): ```{bash} snakemake --snakefile SnakeMAGs.smk --cluster 'sbatch -p --mem -c -o "cluster_logs/{wildcards}.{rule}.{jobid}.out" -e "cluster_logs/{wildcards}.{rule}.{jobid}.err" ' --jobs --use-conda --conda-frontend conda --conda-prefix /path/to/SnakeMAGs_conda_env/ --jobname "{rule}.{wildcards}.{jobid}" --latency-wait 180 --configfile /path/to/config.yaml --keep-going ``` If you are working on a cluster with SGE (tested with version 8.1.9): ```{bash} snakemake --snakefile SnakeMAGs.smk --cluster "qsub -cwd -V -q -pe thread {threads} -e cluster_logs/{rule}.e{jobid} -o cluster_logs/{rule}.o{jobid}" --jobs --use-conda --conda-frontend conda --conda-prefix /path/to/SnakeMAGs_conda_env/ --jobname "{rule}.{wildcards}.{jobid}" --latency-wait 180 --configfile /path/to/config.yaml --keep-going ``` # Test We provide you a small data set in the [test](https://github.com/Nachida08/SnakeMAGs/tree/main/test) directory which will allow you to validate your instalation and take your first steps with SnakeMAGs. This data set is a subset from [ZymoBiomics Mock Community](https://www.zymoresearch.com/blogs/blog/zymobiomics-microbial-standards-optimize-your-microbiomics-workflow) (250K reads) used in this tutoriel [metagenomics_tutorial](https://github.com/pjtorres/metagenomics_tutorial). 1. Before getting started make sure you have cloned the SnakeMAGs repository or you have downloaded all the necessary files (SnakeMAGs.smk, config.yaml, chr19.fa.gz, insub732_2_R1.fastq.gz, insub732_2_R2.fastq.gz). See the [SnakeMAGs executable](#snakemags-executable) section. 2. Unzip the fastq files and the host sequences file. ``` gunzip fastqs/insub732_2_R1.fastq.gz fastqs/insub732_2_R2.fastq.gz host_genomes/chr19.fa.gz ``` 3. For better organisation put all the read files in the same directory (eg. fastqs) and the host sequences file in a separate directory (eg. host_genomes) 4. Edit the config file (see [Edit config file](#edit-config-file) section) 5. Run the test (see [Run SnakeMAGs](#run-snakemags) section) Note: the analysis of these files took 1159.32 secondes to complete on a Ubuntu 22.04 LTS with an Intel(R) Xeon(R) Silver 4210 CPU @ 2.20GHz x 40 processor, 96GB of RAM. # Genome reference for host reads filtering For host-associated samples, one can remove host sequences from the metagenomic reads by mapping these reads against a reference genome. In the case of termite gut metagenomes, we are providing [here](https://zenodo.org/record/6908287#.YuAdFXZBx8M) the relevant files (fasta and index files) from termite genomes. Upon request, we can help you to generate these files for your own reference genome and make them available to the community. NB. These steps of mapping generate voluminous files such as .bam and .sam. Depending on your disk space, you might want to delete these files after use. # Use case During the test phase of the development of SnakeMAGs, we used this workflow to process 10 publicly available termite gut metagenomes generated by Illumina sequencing, to ultimately reconstruct prokaryotic MAGs. These metagenomes were retrieved from the NCBI database using the following accession numbers: SRR10402454; SRR14739927; SRR8296321; SRR8296327; SRR8296329; SRR8296337; SRR8296343; DRR097505; SRR7466794; SRR7466795. They come from five different studies: Waidele et al, 2019; Tokuda et al, 2018; Romero Victorica et al, 2020; Moreira et al, 2021; and Calusinska et al, 2020. ## Download the Illumina pair-end reads We use fasterq-dump tool to extract data in FASTQ-format from SRA-accessions. It is a commandline-tool which offers a faster solution for downloading those large files. ``` # Install and activate sra-tools environment ## Note: For this study we used sra-tools 2.11.0 conda activate conda install -c bioconda sra-tools conda activate sra-tools # Download fastqs in a single directory mkdir raw_fastq cd raw_fastq fasterq-dump --threads --skip-technical --split-3 ``` ## Download Genome reference for host reads filtering ``` mkdir host_genomes cd host_genomes wget https://zenodo.org/record/6908287/files/termite_genomes.fasta.gz gunzip termite_genomes.fasta.gz ``` ## Edit the config file See [Edit config file](#edit-config-file) section. ## Run SnakeMAGs ``` conda activate snakemake_7.0.0 mkdir cluster_logs snakemake --snakefile SnakeMAGs.smk --cluster 'sbatch -p --mem -c -o "cluster_logs/{wildcards}.{rule}.{jobid}.out" -e "cluster_logs/{wildcards}.{rule}.{jobid}.err" ' --jobs --use-conda --conda-frontend conda --conda-prefix /path/to/SnakeMAGs_conda_env/ --jobname "{rule}.{wildcards}.{jobid}" --latency-wait 180 --configfile /path/to/config.yaml --keep-going ``` ## Study results The MAGs reconstructed from each metagenome and their taxonomic classification are available in this [repository](https://doi.org/10.5281/zenodo.7661004). # Citations If you use SnakeMAGs, please cite: > Tadrent N, Dedeine F and Hervé V. SnakeMAGs: a simple, efficient, flexible and scalable workflow to reconstruct prokaryotic genomes from metagenomes [version 2; peer review: 2 approved]. F1000Research 2023, 11:1522 (https://doi.org/10.12688/f1000research.128091.2) Please also cite the dependencies: - [Snakemake](https://doi.org/10.12688/f1000research.29032.2) : Mölder, F., Jablonski, K. P., Letcher, B., Hall, M. B., Tomkins-tinch, C. H., Sochat, V., Forster, J., Lee, S., Twardziok, S. O., Kanitz, A., Wilm, A., Holtgrewe, M., Rahmann, S., Nahnsen, S., & Köster, J. (2021) Sustainable data analysis with Snakemake [version 2; peer review: 2 approved]. *F1000Research* 2021, 10:33. - [illumina-utils](https://doi.org/10.1371/journal.pone.0066643) : Murat Eren, A., Vineis, J. H., Morrison, H. G., & Sogin, M. L. (2013). A Filtering Method to Generate High Quality Short Reads Using Illumina Paired-End Technology. *PloS ONE*, 8(6), e66643. - [Trimmomatic](https://doi.org/10.1093/bioinformatics/btu170) : Bolger, A. M., Lohse, M., & Usadel, B. (2014). Genome analysis Trimmomatic: a flexible trimmer for Illumina sequence data. *Bioinformatics*, 30(15), 2114-2120. - [Bowtie2](https://doi.org/10.1038/nmeth.1923) : Langmead, B., & Salzberg, S. L. (2012). Fast gapped-read alignment with Bowtie 2. *Nature Methods*, 9(4), 357–359. - [SAMtools](https://doi.org/10.1093/bioinformatics/btp352) : Li, H., Handsaker, B., Wysoker, A., Fennell, T., Ruan, J., Homer, N., Marth, G., Abecasis, G., & Durbin, R. (2009). The Sequence Alignment/Map format and SAMtools. *Bioinformatics*, 25(16), 2078–2079. - [BEDtools](https://doi.org/10.1093/bioinformatics/btq033) : Quinlan, A. R., & Hall, I. M. (2010). BEDTools: A flexible suite of utilities for comparing genomic features. *Bioinformatics*, 26(6), 841–842. - [MEGAHIT](https://doi.org/10.1093/bioinformatics/btv033) : Li, D., Liu, C. M., Luo, R., Sadakane, K., & Lam, T. W. (2015). MEGAHIT: An ultra-fast single-node solution for large and complex metagenomics assembly via succinct de Bruijn graph. *Bioinformatics*, 31(10), 1674–1676. - [bwa](https://doi.org/10.1093/bioinformatics/btp324) : Li, H., & Durbin, R. (2009). Fast and accurate short read alignment with Burrows-Wheeler transform. *Bioinformatics*, 25(14), 1754–1760. - [MetaBAT2](https://doi.org/10.7717/peerj.7359) : Kang, D. D., Li, F., Kirton, E., Thomas, A., Egan, R., An, H., & Wang, Z. (2019). MetaBAT 2: An adaptive binning algorithm for robust and efficient genome reconstruction from metagenome assemblies. *PeerJ*, 2019(7), 1–13. - [CheckM](https://doi.org/10.1101/gr.186072.114) : Parks, D. H., Imelfort, M., Skennerton, C. T., Hugenholtz, P., & Tyson, G. W. (2015). CheckM: Assessing the quality of microbial genomes recovered from isolates, single cells, and metagenomes. *Genome Research*, 25(7), 1043–1055. - [GTDB-Tk](https://doi.org/10.1093/BIOINFORMATICS/BTAC672) : Chaumeil, P.-A., Mussig, A. J., Hugenholtz, P., Parks, D. H. (2022). GTDB-Tk v2: memory friendly classification with the genome taxonomy database. *Bioinformatics*. - [CoverM](https://github.com/wwood/CoverM) - [Waidele et al, 2019](https://doi.org/10.1101/526038) : Waidele, L., Korb, J., Voolstra, C. R., Dedeine, F., & Staubach, F. (2019). Ecological specificity of the metagenome in a set of lower termite species supports contribution of the microbiome to adaptation of the host. *Animal Microbiome*, 1(1), 1–13. - [Tokuda et al, 2018](https://doi.org/10.1073/pnas.1810550115) : Tokuda, G., Mikaelyan, A., Fukui, C., Matsuura, Y., Watanabe, H., Fujishima, M., & Brune, A. (2018). Fiber-associated spirochetes are major agents of hemicellulose degradation in the hindgut of wood-feeding higher termites. *Proceedings of the National Academy of Sciences of the United States of America*, 115(51), E11996–E12004. - [Romero Victorica et al, 2020](https://doi.org/10.1038/s41598-020-60850-5) : Romero Victorica, M., Soria, M. A., Batista-García, R. A., Ceja-Navarro, J. A., Vikram, S., Ortiz, M., Ontañon, O., Ghio, S., Martínez-Ávila, L., Quintero García, O. J., Etcheverry, C., Campos, E., Cowan, D., Arneodo, J., & Talia, P. M. (2020). Neotropical termite microbiomes as sources of novel plant cell wall degrading enzymes. *Scientific Reports*, 10(1), 1–14. - [Moreira et al, 2021](https://doi.org/10.3389/fevo.2021.632590) : Moreira, E. A., Persinoti, G. F., Menezes, L. R., Paixão, D. A. A., Alvarez, T. M., Cairo, J. P. L. F., Squina, F. M., Costa-Leonardo, A. M., Rodrigues, A., Sillam-Dussès, D., & Arab, A. (2021). Complementary contribution of Fungi and Bacteria to lignocellulose digestion in the food stored by a neotropical higher termite. *Frontiers in Ecology and Evolution*, 9(April), 1–12. - [Calusinska et al, 2020](https://doi.org/10.1038/s42003-020-1004-3) : Calusinska, M., Marynowska, M., Bertucci, M., Untereiner, B., Klimek, D., Goux, X., Sillam-Dussès, D., Gawron, P., Halder, R., Wilmes, P., Ferrer, P., Gerin, P., Roisin, Y., & Delfosse, P. (2020). Integrative omics analysis of the termite gut system adaptation to Miscanthus diet identifies lignocellulose degradation enzymes. *Communications Biology*, 3(1), 1–12. - [Orakov et al, 2021](https://doi.org/10.1186/s13059-021-02393-0) : Orakov, A., Fullam, A., Coelho, L. P., Khedkar, S., Szklarczyk, D., Mende, D. R., Schmidt, T. S. B., & Bork, P. (2021). GUNC: detection of chimerism and contamination in prokaryotic genomes. *Genome Biology*, 22(1). - [Parks et al, 2015](https://doi.org/10.1101/gr.186072.114) : Parks, D. H., Imelfort, M., Skennerton, C. T., Hugenholtz, P., & Tyson, G. W. (2015). CheckM: Assessing the quality of microbial genomes recovered from isolates, single cells, and metagenomes. *Genome Research*, 25(7), 1043–1055. # License This project is licensed under the CeCILL License - see the [LICENSE](https://github.com/Nachida08/SnakeMAGs/blob/main/LICENCE) file for details. Developed by Nachida Tadrent at the Insect Biology Research Institute ([IRBI](https://irbi.univ-tours.fr/)), under the supervision of Franck Dedeine and Vincent Hervé." assertion.